Beyond alternative: assessment as the forgotten piece in online learning

As part of our joint 'Teaching politics and IR online: “design matters” webinar series', run in conjunction with the Political Studies Association (PSA), speakers Simon Lightfoot, Simon Rofe and Helen Williams have put together this complementary article on assessment and feedback.

The discipline of Politics and International Relations, with some honourable exceptions, has remained fairly conservative in its assessment methods, while many disciplines have moved on considerably from the classic timed exam/coursework essay assessment regime. The challenges of the COVID-19 pandemic create a window of opportunity within higher education for better assessment design. Here we look at:

- Barriers to change

- “Bake in, don’t bolt on” innovative assessment

- Don’t forget the F-word (feedback)!

Barriers to change

One of the most common barriers to change is institutional constraints, such as the structure of the form for module approval paperwork. Administrative systems have often been set up to process traditional assessment regimes and do not appear to create opportunities for more innovative assessments. However, this constraint is often illusory: ‘coursework’, for example, can encompass many things beyond a traditional essay. Many academics can testify that there is often more opportunity for change and innovation, and that a careful discussion with peers, senior managers and administrators can find ways around this constraint.

When designing new assessment regimes, it is important to consider:

- Module-level assessment: does the proposed assessment assess the module’s stated learning aims and outcomes? If not, is this because the learning outcomes (LOs) need to be updated, or because the assessment needs to be refocused? A balance between the two is therefore needed.

- Programme-level assessment: how does the proposed assessment fit in with programme-level outcomes, scaffolded building of skills, and providing students with the opportunity to attempt each type of assessment multiple times?

- Iterative assessment: how does the assessment encourage a reflective learning process that allows students to attempt similar formative tasks before summative assessments? How do the feedback and outcome of each assessment link to the next assessment?

- Assessment for learning, not just of learning: assessment is one of the greatest tools we have to leverage student behaviour and increase their engagement with the subject matter. Rather than viewing assessment as something that happens at the end of the module to check they’ve learned the content, assessment should be a core part of the learning process, inspiring students to deeper learning.

- Authentic assessment: how does the assessment mirror the conditions and types of tasks that students are likely to encounter in the ‘real world’ after graduation? From this perspective, timed, closed-book exams are among the most inauthentic forms of assessment, as this is a format that students are highly unlikely to encounter after graduation.

Diversity of assessment – the Goldilocks balance

Politics and IR could be much more creative in assessments, but it is important not to introduce greater diversity for the sake of it without taking in the wider picture of what is happening across students’ degrees. Students are often just as conservative as staff in approaching new assessment types and need to have time and opportunities to become proficient in different styles of writing. That said, it is worth considering introducing more ‘authentic’ assessments. Some inspirations to consider can be found in Table 1 below.

Table 1 - Examples of assessment types

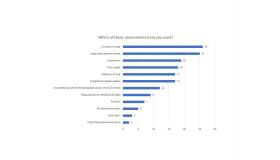

Results from a straw poll of webinar attendees uncovered a positive trend towards greater use of reflective writing in the discipline (Figure 1). Reflective writing can be one of the most positive ways of forcing students to engage critically with their own learning process and increase their skills in critical analysis and self-efficacy, yet it is also one of the areas that our students are least comfortable in taking on. They have often been trained very strictly against use of the first person and can struggle to understand what our expectations are in evaluating reflective writing.

Figure 1 - Webinar audience responses on use of assessment types

Don’t forget the F-word: how to maximise feedback opportunities

Just as we should look at the module holistically when we design assessment, we should also make sure that we have incorporated opportunities for feedback and gauging understanding. This does not necessarily need to be in the form of tutor-issued, formal, written feedback: expanding our (and our students’!) understanding of what feedback is and when, where and how it happens is key to helping students engage with it more.

One source of feedback is through careful use of peer review activities. Many assessment tools, including within VLEs and from external providers like Turnitin, have inbuilt peer review tools that allow for double-blind peer review activities, which can help both the reviewer and the reviewee feel more confident. There is a wealth of evidence demonstrating that students learn more from evaluating each other’s work than they do from feedback teachers provide.

It is very important never to prompt students to award each other a mark, however: this can be very damaging both to those receiving the mark and, perhaps less obviously, to those awarding it. When it has been done, the marks are often wildly inaccurate. Instead, careful use of open questions can help the reviewer to assess the work more critically and help the reviewee to improve their work, even if the reviewer’s assessment is incorrect. Helpful questions that prompt reflection from both sides include:

- Summarise the main argument in a limited number of words. What are the main sections of the structure? If the reviewer can’t identify these easily, then they probably need to be clearer.

- What is one thing the author did well? This is an important part of training students that critical analysis, a transferable skill, does not just mean highlighting negative points, and that even weak submissions will have done at least one thing correctly.

- What is one thing the author could improve? It is important not to create a situation where a reviewer returns a demoralisingly long list of necessary improvements. Instead, focusing on one item can make the improvement more manageable. (This is also a lesson academics could learn: it is better not to highlight more than three key areas for improvement in our assessment feedback, as it can otherwise become so lengthy and negative that it is overwhelming.)

Another way to encourage the reflective learning process and engagement with feedback is to include self-reflection as an assessed component within another assessment. This rewards students for engaging with the learning process, which can help them to ‘grasp the nettle’ and deal with the discomfort of reading feedback. Helen asks her students three key questions at the beginning of their coursework submissions:

- How have you improved your submission in response to feedback from your tutor and peers?

- What would you do differently, knowing what you know now?

- How could your submission be improved (realistically)?

Prompting students with these questions before they submitted the largest part of their summative coursework was found to substantially increase the rate at which students collected their final module feedback, compared with modules where this reflective process was not used.

Finally, we can create opportunities for feedback dialogue without compromising anonymity in the marking process. This dialogue and personalisation of feedback is also more likely to encourage students to read it and engage in the reflective process, because they are receiving feedback on items they are interested in. For example, I ask students to identify up to three things they would like to receive feedback on, and three things they are not interested in. This means that I spend less time writing generic feedback with the same focus for every student, and it demonstrates to the student that I am listening to them. However, when this is done badly, students may stop engaging with these prompts: if students specify areas for feedback that are largely ignored by markers or are responded to with generic comments, it teaches them that this is not a meaningful process.

Authors

Simon Lightfoot is Pro Dean for Student Education and Professor of Politics at the University of Leeds.

Simon Rofe is the inaugural director of the Centre for International Studies and Diplomacy (CISD)'s Global Diplomacy Masters programme (Distance Learning) at SOAS.

Helen Williams is an Associate Professor of Politics at the University of Nottingham.